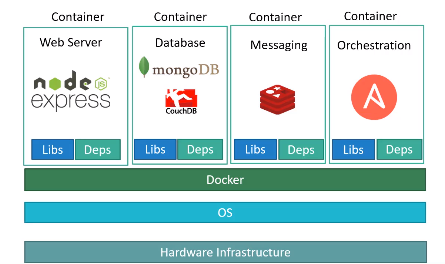

Suppose you are building an application that depends on several technologies: databases, web servers, messaging, and orchestration. Each of these has its own libraries and dependencies, and may have compatibility issues with the underlying OS. Every time you change something in the application or in the technologies it uses, you must check that the change is still supported by those dependencies, which can be tedious. This is where containers come into the picture.

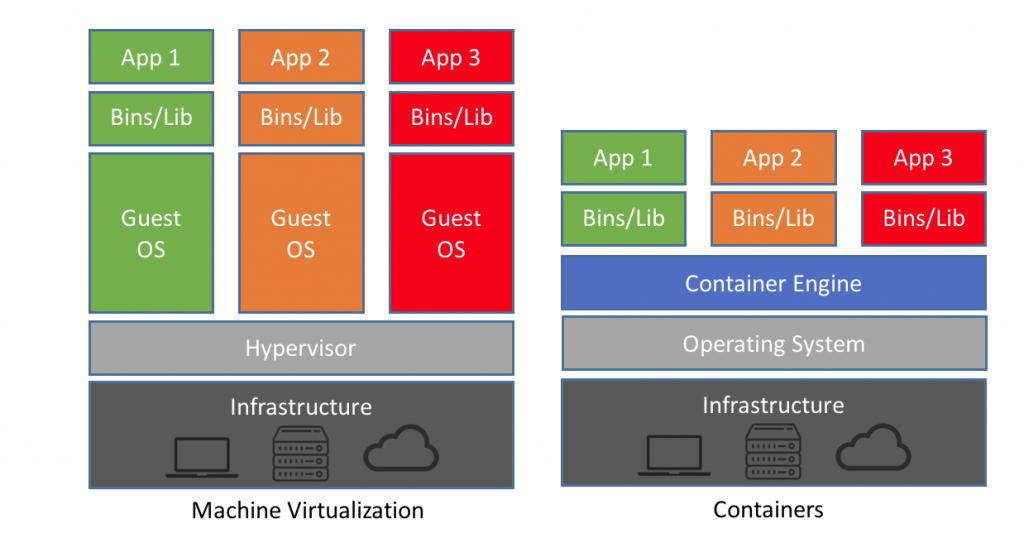

Containers are isolated environments with their own processes, services, network interfaces, and mounts, just like VMs, except that all containers on a host share the host's OS kernel. This is why you cannot run a Windows container on a host with a Linux kernel. Docker is currently the most popular container technology; it provides high-level tools with several powerful functions for the end user.

Unlike a hypervisor, Docker does not virtualize hardware in order to run different operating systems with different kernels on the same machine. It is designed to run containers whose OS is supported by the host's kernel.

Below are some of the key differences between VMs and containers:

Containers therefore make more efficient use of the host machine's resources than VMs do.

| Command | Description |

|---|---|

| docker run < image name > | This command will create a container for the image name that you have specified. |

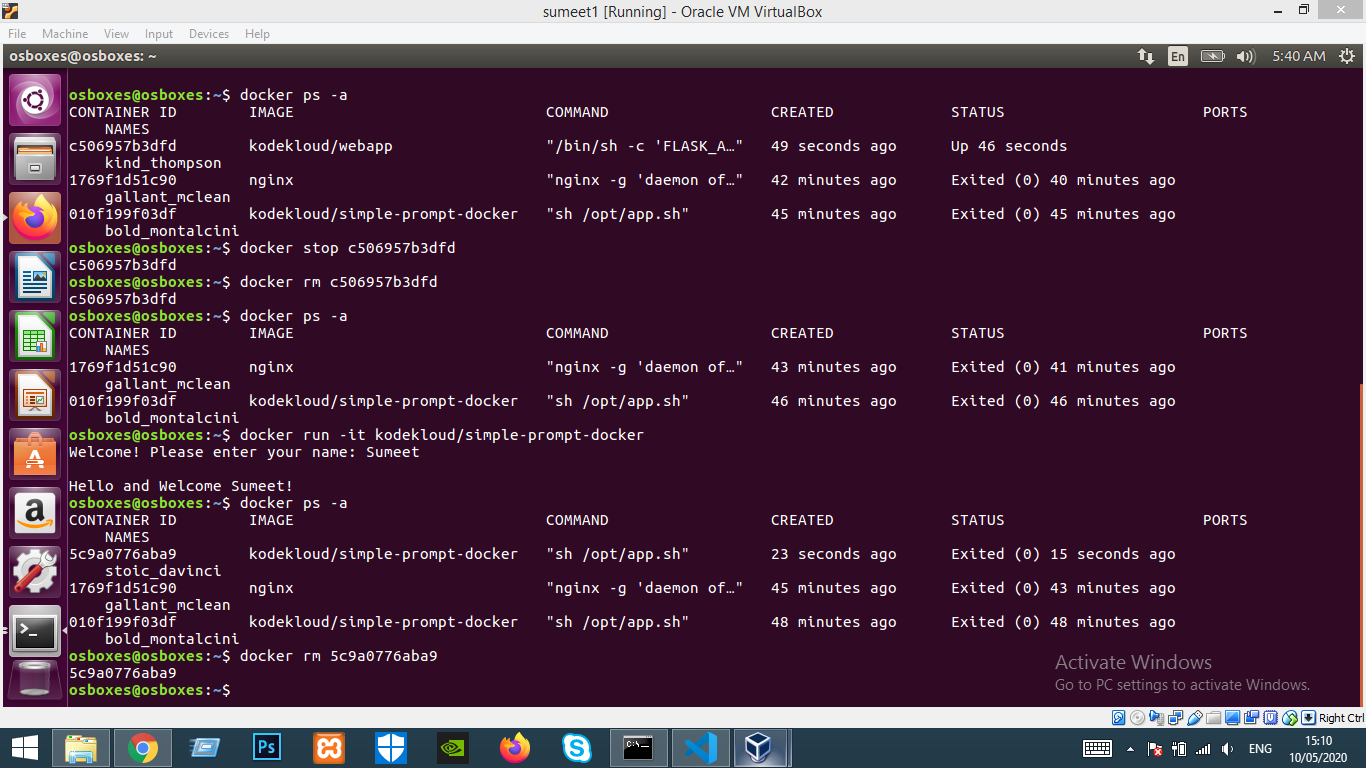

| docker ps docker ps -a |

This will display all running containers. Adding the '-a' will also display stopped/exited containers. |

| docker stop < container name/id > docker rm < container id > |

Used to stop/remove a container. Always stop containers before removing them. |

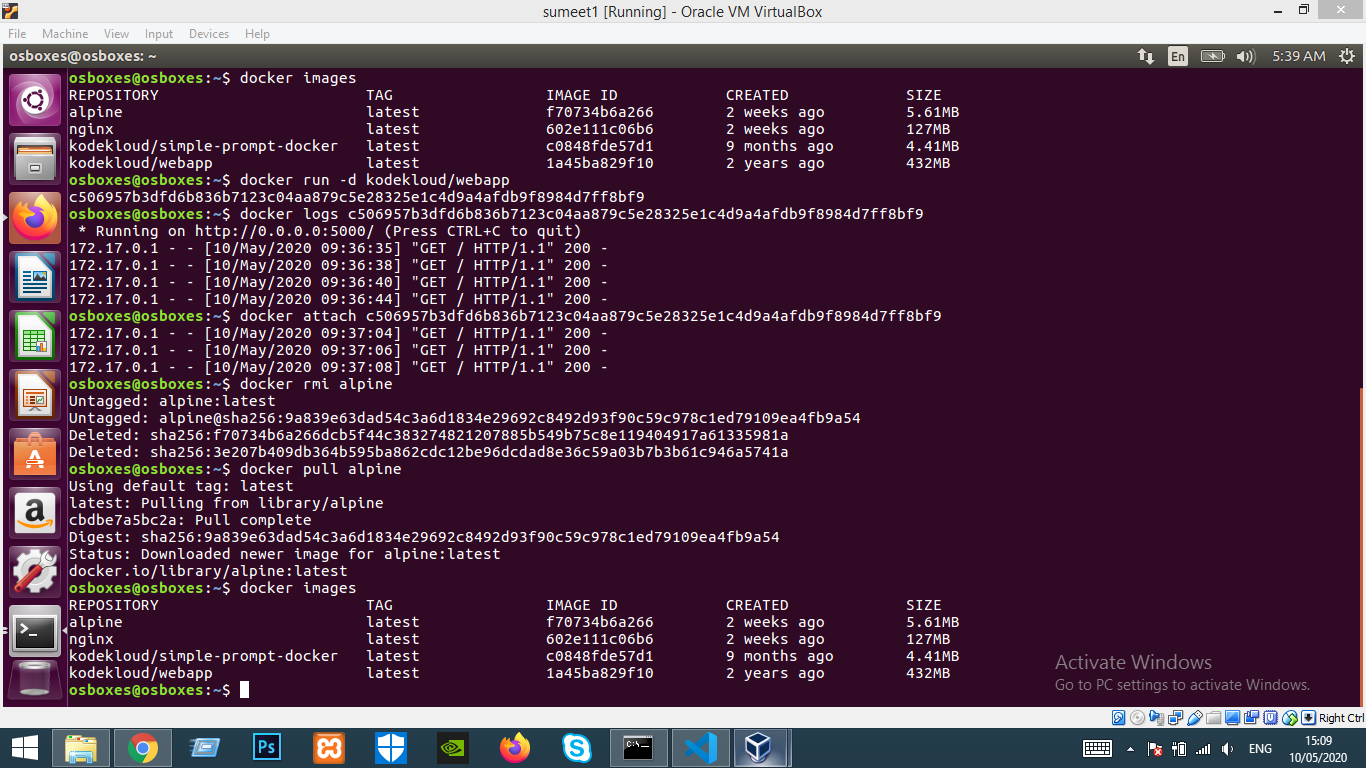

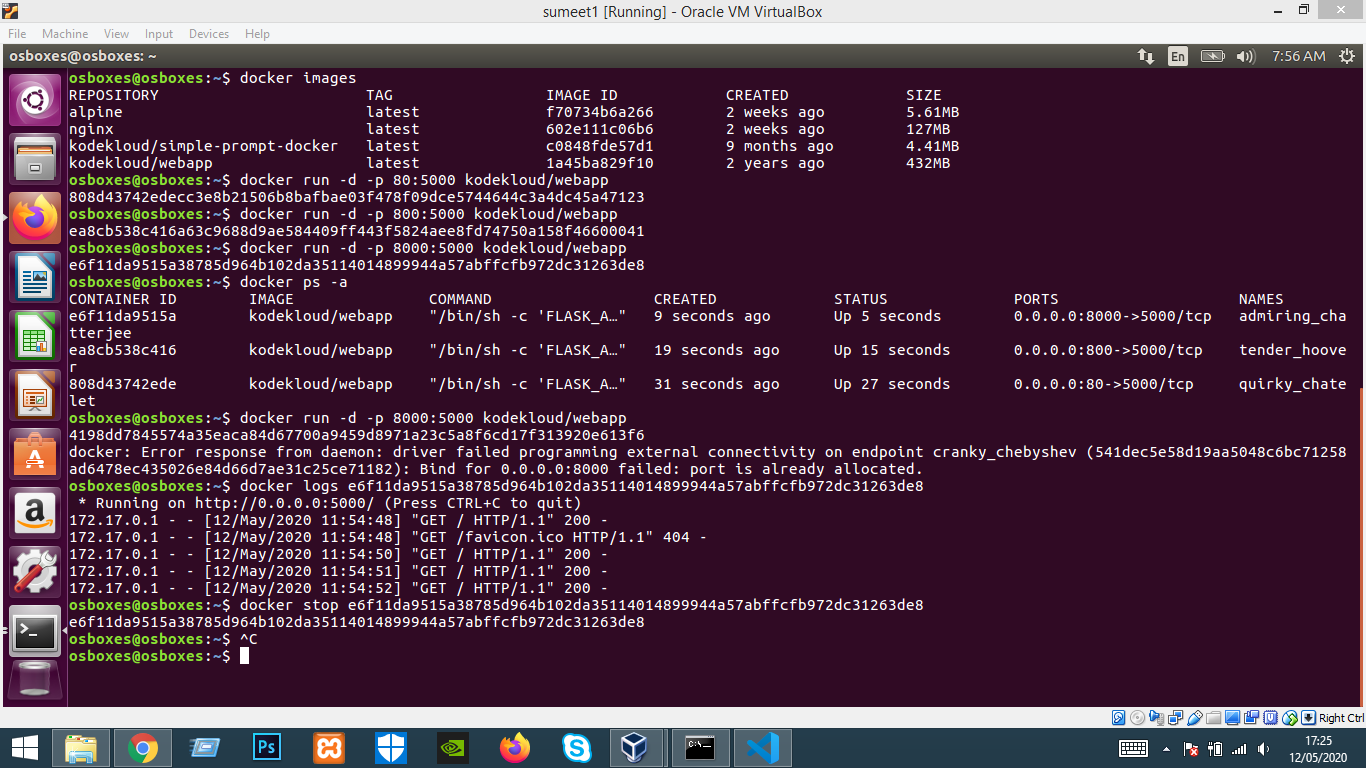

| docker images | Display a list of all existing images. |

| docker rmi < image name > docker pull < image name > |

Used to remove an image / pull latest image. Always stop containers before removing images. |

| docker run ubuntu sleep 5 | Runs an ubuntu image and exits the created container after 5 seconds |

| docker exec < container name/id > cat /etc/hosts (or any other file name) | Display contents from within a container |

| docker run -d < image name > docker attach < container id/name > |

Run an image in detached mode in background using -d i.e. you wont be able to see the logs or other such data and will be directed back to your command line. The container can again be attached to display logs/data but you wont be able to type in command line unless you stop the container. |

| docker logs < container name/id > | Display logs for a container for eg. GET POST calls for a webserver, etc. |

| docker run redis:4.0 | Here 4.0 is used as image tag i.e. docker will run image with release version as 4.0. By default i.e. if not specified the tag is latest. |

| docker run -it < image name > | Using -it, we can run the image in interactive terminal mode which is useful to run applications which require cli input. |

| docker stop $(docker ps -a -q) docker rm $(docker ps -a -q) |

We can use these commands to stop then remove all running containers. '-q' is used to give input of container ids to docker stop/rm command. |
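
The tag rule for `docker run redis:4.0` can be sketched in a few lines of Python. This is only an illustration of the defaulting behaviour described in the table, not Docker's own parser: the helper name `split_image_reference` is made up for this example, and it assumes the reference carries no digest (`@sha256:...`).

```python
from typing import Tuple


def split_image_reference(reference: str) -> Tuple[str, str]:
    """Split 'name[:tag]' into (name, tag), defaulting the tag to 'latest'.

    Assumption for this sketch: the reference has no digest part.
    """
    # A colon marks a tag only if it comes after the last '/', so a
    # registry port such as 'localhost:5000/redis' is not read as a tag.
    last_slash = reference.rfind("/")
    colon = reference.rfind(":")
    if colon > last_slash:
        return reference[:colon], reference[colon + 1:]
    return reference, "latest"


print(split_image_reference("redis:4.0"))  # ('redis', '4.0')
print(split_image_reference("redis"))      # ('redis', 'latest')
```
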

    osboxes@osboxes:~$ docker run kodekloud/webapp
     * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

    osboxes@osboxes:~$ docker inspect 0e5028d09b23 | grep IPAddress
    "SecondaryIPAddresses": null,
    "IPAddress": "172.17.0.2",
    "IPAddress": "172.17.0.2",

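`docker inspect` prints a JSON array, so instead of grepping you can parse it. The sketch below is a hedged example: the function name `container_ip_addresses` and the `SAMPLE` string are invented for illustration (the sample is a trimmed stand-in, not real output), and it assumes the address appears under `NetworkSettings` and again under each entry of `NetworkSettings.Networks`, which would also explain why the grep above prints it twice.

```python
import json
from typing import List


def container_ip_addresses(inspect_json: str) -> List[str]:
    """Return the distinct, non-empty IP addresses in `docker inspect` output."""
    addresses: List[str] = []
    for container in json.loads(inspect_json):  # one object per inspected container
        settings = container.get("NetworkSettings", {})
        candidates = [settings.get("IPAddress")]
        # Each attached network (e.g. the default bridge) has its own entry.
        for network in (settings.get("Networks") or {}).values():
            candidates.append(network.get("IPAddress"))
        for ip in candidates:
            if ip and ip not in addresses:
                addresses.append(ip)
    return addresses


# Trimmed, illustrative sample shaped like the output above (not real output).
SAMPLE = """
[{"NetworkSettings": {
    "SecondaryIPAddresses": null,
    "IPAddress": "172.17.0.2",
    "Networks": {"bridge": {"IPAddress": "172.17.0.2"}}
}}]
"""
print(container_ip_addresses(SAMPLE))  # ['172.17.0.2']
```

On a machine with Docker installed, the input could come from `subprocess.run(["docker", "inspect", container_id], capture_output=True, text=True).stdout`.
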
    docker run -p LOCALHOST_PORT:CONTAINER_PORT <image name>

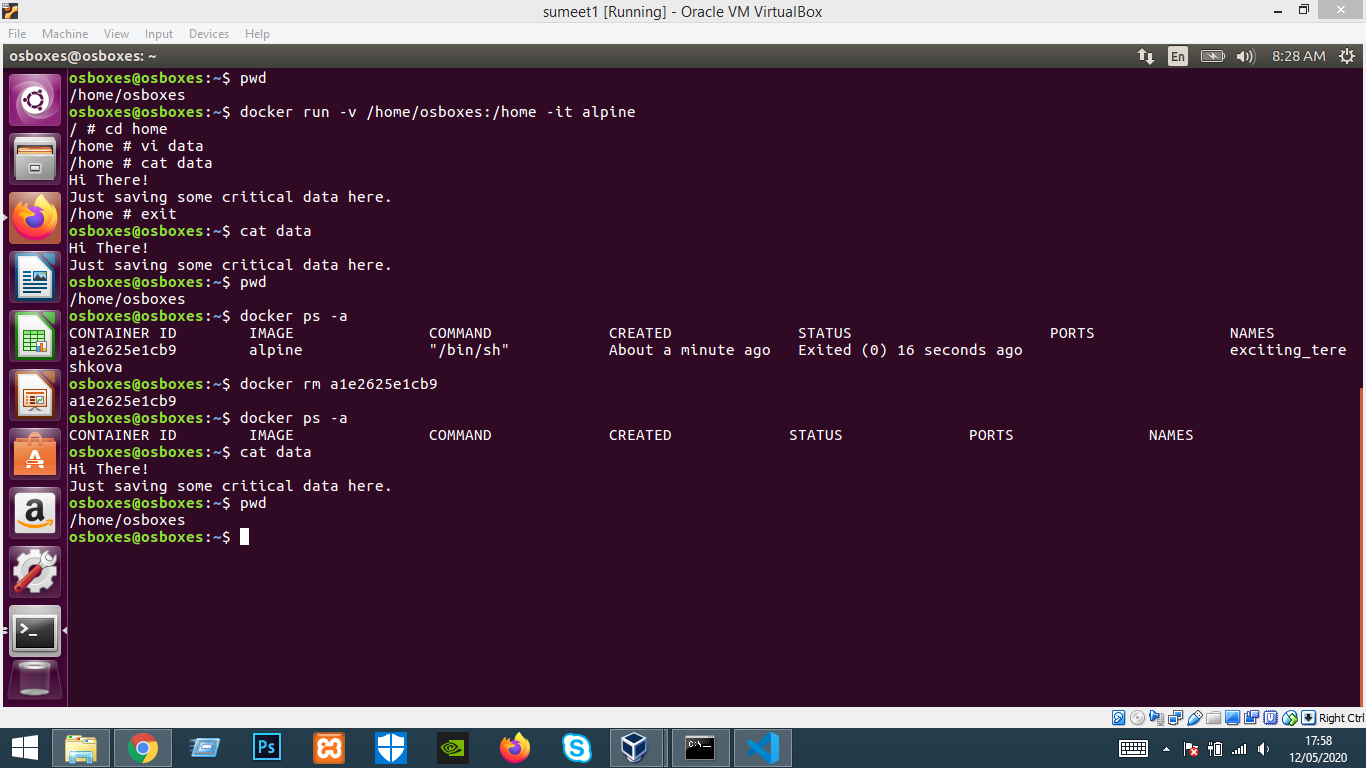

    docker run -v LOCALHOST_FILESYSTEM:CONTAINER_FILESYSTEM <image name>
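
Both mappings follow the same `HOST:CONTAINER` pattern, which a small Python helper can make explicit. This is a minimal sketch that only builds the argument list and does not start anything; the function name `docker_run_args` and the port and path values in the usage lines are hypothetical.

```python
from typing import List, Optional


def docker_run_args(image: str,
                    host_port: Optional[int] = None,
                    container_port: Optional[int] = None,
                    host_path: Optional[str] = None,
                    container_path: Optional[str] = None) -> List[str]:
    """Build a `docker run` argument list with optional port and volume mappings."""
    args = ["docker", "run"]
    if host_port is not None and container_port is not None:
        # -p HOST_PORT:CONTAINER_PORT publishes the container port on the host.
        args += ["-p", f"{host_port}:{container_port}"]
    if host_path is not None and container_path is not None:
        # -v HOST_PATH:CONTAINER_PATH mounts a host directory into the container.
        args += ["-v", f"{host_path}:{container_path}"]
    args.append(image)
    return args


# Hypothetical values: expose the web app's port 5000 on host port 8080.
print(docker_run_args("kodekloud/webapp", host_port=8080, container_port=5000))
# ['docker', 'run', '-p', '8080:5000', 'kodekloud/webapp']
```

With Docker installed, the returned list can be passed directly to `subprocess.run(args, check=True)`.
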

    sumeet@sumeet-Inspiron-3543:~/Flask/weather_app_flask_env$ cat requirements.txt
    Flask==0.12.2
    requests

    sumeet@sumeet-Inspiron-3543:~/Flask/weather_app_flask_env$ cat Dockerfile
    FROM python:3.6.4-alpine
    COPY . /app
    WORKDIR /app
    RUN pip install -r requirements.txt
    EXPOSE 5000
    ENV FLASK_APP weather.py
    CMD ["flask", "run", "--host=0.0.0.0"]

    weather_app_flask_env
    ├── Dockerfile
    ├── requirements.txt
    ├── templates
    │   ├── home.html
    │   └── result.html
    └── weather.py

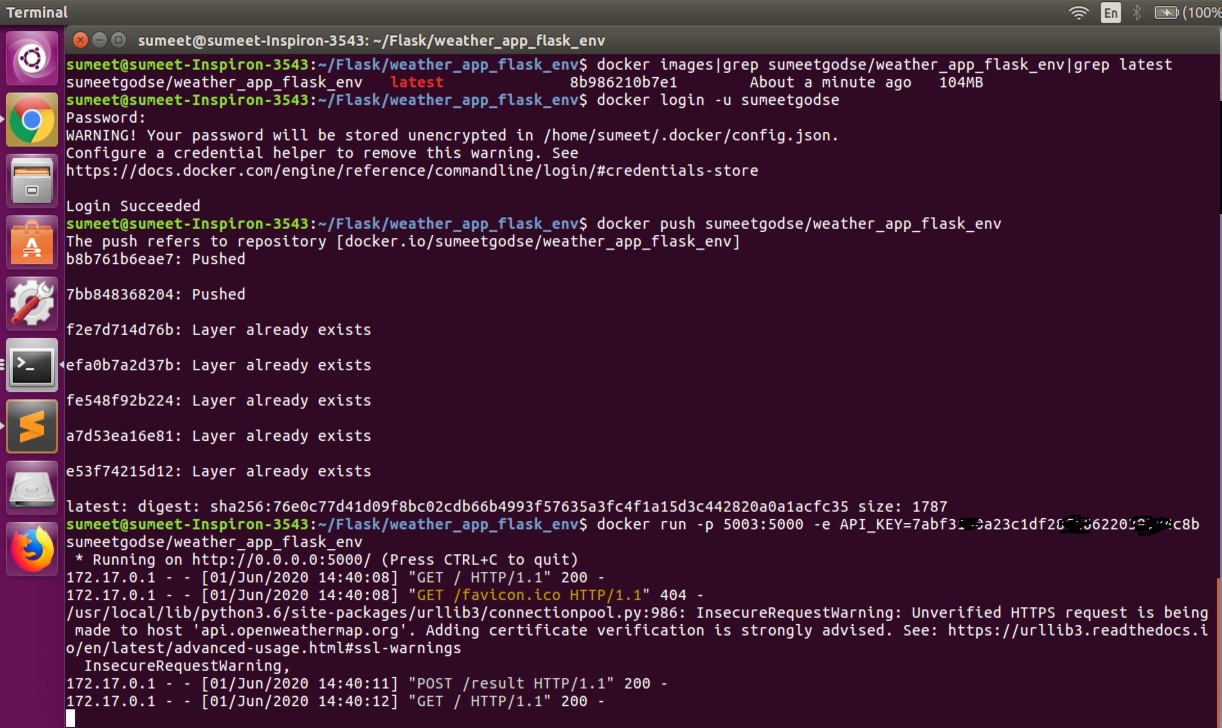

    docker build -t sumeetgodse/weather_app_flask_env .